LiDAR system helps robots, autonomous vehicles see better

University of Washington researchers have developed a LiDAR system that could help self-driving cars see distant objects with clarity and precision.

Machine vision insights

- University of Washington researchers have developed a LiDAR system that could help self-driving cars see distant objects with clarity and precision.

- In addition to making autonomous vehicles safer for everyday use, such LiDAR systems could also help robots and other machines that need to “see” in challenging environments, making them safer for more applications.

Although significant progress has been made over the last several years, achieving fully autonomous vehicles has been more challenging than expected. One of the biggest challenges is safety. Without a human being at the helm, self-driving cars need to be able to discern and distinguish objects in their path, including pedestrians and other vehicles. The car must be able to accurately assess distances as far as 300 meters and decide whether there is a need to turn, slow down, or stop.

Light detection and ranging (LiDAR) is a 3D-imaging technology often used for this purpose in self-driving cars because of its superior resolution and range compared with other types of imaging sensors and devices. Many manufacturers today are working toward developing more cost-effective, compact LiDAR systems, and virtually all producers of self-driving cars consider LiDAR to be a key, enabling technology.

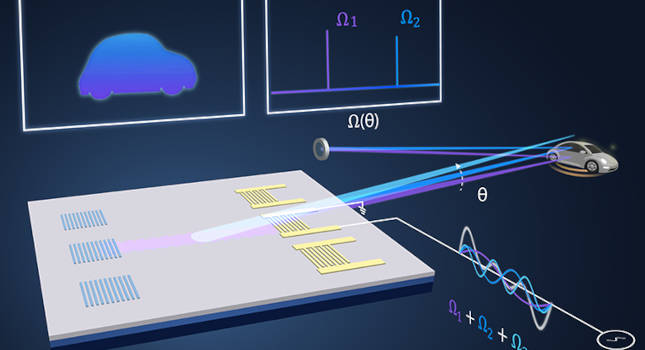

Now, as described in a paper published in the journal Nature, a UW ECE research team has invented a LiDAR system that could help self-driving cars “see” distant objects with clarity and precision. At the core of their innovation is a laser beam-steering device that is roughly 1,000 times smaller than its counterparts currently on the market. It is integrated into a computer chip, which makes it compact, sturdy, relatively easy to fabricate and cheap to produce. The technology also has a wide range of other potential applications, including use in robotic systems found in agriculture, global supply chains, and medical imaging.

The research team behind this invention was led by UW ECE and Physics Professor Mo Li, who is the Department’s associate chair for research and senior author of the Nature paper.

“We have invented a completely new type of laser beam-steering device without any moving parts for scanning LiDAR systems and integrated it into a computer chip,” Professor Li said. “This new technology uses sound running on the surface of the chip to steer a scanning laser into free space. It can detect and image objects in three dimensions from over 100 meters away.”

Co-lead authors of the Nature paper are Bingzhao Li, a postdoctoral scholar, and Qixuan Lin, a UW ECE graduate student, who are both members of the Laboratory of Photonic Systems that Professor Li leads at the UW. The two conducted most of the experimental work with oversight and guidance from Professor Li.

A UW ECE research team led by UW ECE and Physics Professor Mo Li has invented a new type of light detection and ranging, or LiDAR, technology that helps autonomous vehicles “see” distant objects. This form of LiDAR is integrated onto a computer chip. The chip uses sound waves running over its surface to steer a laser beam like a searchlight, so self-driving cars can detect and discern faraway objects, such as pedestrians and other vehicles. Courtesy: Bingzhao Li and Qixuan Lin, University of Washington

Steering light with sound

The research team developed this technology specifically for chip-based scanning LiDAR systems, in which a single, powerful (but eye-safe) laser beam is sent out from the system to scan for objects far in the distance. For self-driving cars, safety regulations require the beam to reach between 200 and 300 m, which is approximately the distance a vehicle traveling faster than 60 miles per hour needs to safely turn or stop before crashing into an object.
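To put the 200 to 300 m requirement in perspective, the short sketch below (our own back-of-envelope arithmetic, not from the UW team) converts 60 mph to meters per second, computes how long a car at that speed takes to cover each distance, and computes how long light needs to travel to a target and back. The round-trip figure is simple physics and holds for any ranging scheme; the article does not say which ranging method the UW system uses.

```python
# Back-of-envelope numbers behind the LiDAR range requirement.
SPEED_OF_LIGHT_M_S = 299_792_458.0
METERS_PER_MILE = 1609.344
SECONDS_PER_HOUR = 3600.0


def mph_to_m_s(mph: float) -> float:
    """Convert miles per hour to meters per second."""
    return mph * METERS_PER_MILE / SECONDS_PER_HOUR


def seconds_to_cover(distance_m: float, speed_mph: float) -> float:
    """Time a vehicle at constant speed needs to travel distance_m."""
    return distance_m / mph_to_m_s(speed_mph)


def round_trip_s(distance_m: float) -> float:
    """Time for light to reach a target at distance_m and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    for d in (115.0, 200.0, 300.0):
        print(f"{d:5.0f} m: {seconds_to_cover(d, 60):5.2f} s at 60 mph, "
              f"echo returns after {round_trip_s(d) * 1e6:.2f} us")
```

At 60 mph (about 26.8 m/s), 300 m is roughly 11 seconds of travel, while the light echo from that distance comes back in about 2 microseconds.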

One of the key challenges for this type of scanning LiDAR system is reliably guiding the beam out of the chip and into free space, so it can quickly and accurately scan objects that are far away, like a searchlight. Various beam-steering technologies have been developed over the years to do this; however, because most of them rely on an intricate assemblage of movable mechanical parts (for example, a fast-rotating polygon equipped with mirrors to reflect the laser beam), many of these mechanical devices continue to be bulky, fragile, and expensive to produce. By comparison, the research team’s solution is completely non-mechanical. That is, their beam-steering device has no moving mechanical parts, because it is fully integrated into a solid-state computer chip.

To achieve this tight integration, the team developed an innovative technique, called “acousto-optic beam steering,” that guides the scanning laser beam by sending sound wave pulses across the surface of the computer chip. The sound wave has a very high frequency of a few gigahertz, similar to what is used in wireless communication, so it is not audible.

“At a quantum physics level, the particles in the sound waves (phonons) collide with the particles in the laser beam (photons) on the surface of the chip, and their energy combines,” Bingzhao Li explained. “We precisely adjust the frequency of the sound waves to direct the beam into different directions in the free space above the chip and far into the distance.”
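A simplified way to see why the sound frequency sets the beam direction is to treat the surface sound wave as a moving diffraction grating whose period equals the acoustic wavelength, v/f. At a few gigahertz that period is around a micrometer, comparable to the wavelength of infrared light. Under standard grating phase matching, the light leaves the chip at an angle θ from the surface normal with sin θ = n_eff − λf/v. The sketch below assumes that textbook model; the effective index (1.9), acoustic velocity (3,500 m/s) and 1,550 nm wavelength are illustrative placeholders, not values reported in the Nature paper.

```python
import math


def emission_angle_deg(f_acoustic_hz: float,
                       wavelength_m: float = 1.55e-6,
                       n_eff: float = 1.9,
                       v_acoustic_m_s: float = 3500.0) -> float:
    """Angle (from the surface normal) at which light leaves the chip.

    Simplified grating phase-matching model: the traveling sound wave acts as
    a grating with period v / f, so sin(theta) = n_eff - wavelength / period.
    All default parameter values are illustrative assumptions.
    """
    grating_period_m = v_acoustic_m_s / f_acoustic_hz
    sin_theta = n_eff - wavelength_m / grating_period_m
    if not -1.0 <= sin_theta <= 1.0:
        # No radiating solution: the light stays guided inside the chip.
        raise ValueError("light stays trapped in the chip at this frequency")
    return math.degrees(math.asin(sin_theta))


if __name__ == "__main__":
    for f_ghz in (2.5, 2.75, 3.0, 3.25):
        print(f"{f_ghz:.2f} GHz -> {emission_angle_deg(f_ghz * 1e9):5.1f} deg")
```

Under these assumed parameters, raising the drive from 2.5 to 3.25 GHz swings the beam from about 52 to about 27 degrees, which is the kind of frequency-controlled sweep the researchers describe.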

In order to render accurate images of objects in the distance, this technology also exploits a physical principle called “Brillouin scattering,” in which photons in the laser beam steered at different angles are each labeled with unique frequency changes. With this technique, only one receiver is needed to decode the information coming back to the device from the scanning laser beam. This enabled the research team to accurately render images of objects far away while also significantly shrinking the overall size of the LiDAR system.
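One way to picture the frequency labeling: in Brillouin scattering, a photon that absorbs or emits a phonon has its optical frequency shifted by the phonon's frequency, so light steered by a given acoustic drive carries that drive frequency as an offset. The toy sketch below assumes a hypothetical calibration table linking drive frequencies to beam angles (the numbers, and the 193.4 THz carrier, are invented for illustration) and decodes a returned optical frequency back to an angle by picking the nearest label.

```python
# Toy model of frequency labeling. The carrier frequency and the
# calibration table are hypothetical values chosen for illustration.
LASER_HZ = 193.4e12  # roughly a 1,550 nm laser carrier

# Hypothetical calibration: acoustic drive frequency (Hz) -> beam angle (deg).
CALIBRATION = {
    2.50e9: 52.5,
    2.75e9: 43.0,
    3.00e9: 34.8,
    3.25e9: 27.4,
}


def returned_frequency(f_acoustic_hz: float) -> float:
    """Optical frequency of light steered by this drive.

    Toy assumption: the photon gains the phonon's energy, so its frequency
    rises by exactly the acoustic drive frequency.
    """
    return LASER_HZ + f_acoustic_hz


def angle_from_returned_frequency(f_return_hz: float) -> float:
    """Recover the beam angle from the frequency offset of returned light.

    Uses the magnitude of the offset, so an upshift or a downshift of the
    same size decodes to the same angle.
    """
    offset_hz = abs(f_return_hz - LASER_HZ)
    nearest_drive = min(CALIBRATION, key=lambda f: abs(f - offset_hz))
    return CALIBRATION[nearest_drive]


if __name__ == "__main__":
    for drive in CALIBRATION:
        angle = angle_from_returned_frequency(returned_frequency(drive))
        print(f"{drive / 1e9:.2f} GHz label -> {angle} deg")
```

Because each angle owns a distinct frequency, the decoder needs no spatial information at all, only the measured offset.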

“We can tell the direction of the reflected laser light from its ‘color,’ a method we named ‘frequency-angular resolving,’” Qixuan Lin said. “Our receiver only needs a single imaging pixel, rather than a full camera, to image objects far away. Therefore, it is much smaller and cheaper than the LiDAR receivers commonly used today.”
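The single-pixel idea can be illustrated with a scaled-down toy. If every beam direction is tagged with its own beat frequency, one photodetector sees the sum of all the tones, and a spectral measurement reveals which directions sent back an echo. The sketch below uses the Goertzel algorithm to measure power at each tag frequency. The kHz tones, sample rate and detection threshold are assumptions chosen for illustration; the real system works at gigahertz offsets, and the article does not describe its signal processing.

```python
import math

# Toy, scaled-down model of a single-pixel receiver. Real beat notes would sit
# at GHz offsets; here each direction gets a kHz tag tone (hypothetical values).
SAMPLE_RATE_HZ = 100_000.0
N_SAMPLES = 1_000  # 10 ms record, so frequency bins are 100 Hz apart
DIRECTION_TONES_HZ = {0: 5_000.0, 1: 7_000.0, 2: 9_000.0, 3: 11_000.0}


def simulate_pixel_signal(echo_amplitudes):
    """One photodetector's output: a sum of tag tones, one per echoing direction."""
    return [
        sum(amp * math.cos(2 * math.pi * DIRECTION_TONES_HZ[d] * n / SAMPLE_RATE_HZ)
            for d, amp in echo_amplitudes.items())
        for n in range(N_SAMPLES)
    ]


def goertzel_power(samples, freq_hz):
    """Signal power in the DFT bin nearest freq_hz (Goertzel algorithm)."""
    k = round(len(samples) * freq_hz / SAMPLE_RATE_HZ)
    w = 2 * math.pi * k / len(samples)
    coeff = 2 * math.cos(w)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev2 ** 2 + s_prev ** 2 - coeff * s_prev * s_prev2


def directions_with_echo(samples, threshold_ratio=0.1):
    """Return the directions whose tag tone carries significant power."""
    powers = {d: goertzel_power(samples, f) for d, f in DIRECTION_TONES_HZ.items()}
    peak = max(powers.values())
    if peak == 0.0:
        return []  # silent pixel: nothing came back
    return sorted(d for d, p in powers.items() if p >= threshold_ratio * peak)


if __name__ == "__main__":
    pixel = simulate_pixel_signal({0: 1.0, 2: 0.5})
    print(directions_with_echo(pixel))
```

With echoes from directions 0 and 2 mixed into one pixel's signal, the function returns `[0, 2]`, so one detector is enough to tell the directions apart.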

The small size of their acousto-optic beam-steering device, along with the fact that it is integrated into a solid-state computer chip, makes it much more compact and sturdier than its mechanical counterparts. It also makes it possible to reduce fabrication costs and shrink the size of the LiDAR system that encompasses the beam-steering chip. This holds the potential to shrink the entire scanning LiDAR system from about the size of a large coffee mug to that of a small matchbox.

“I think an important point to make is that there are a lot of applications for LiDAR that are still to be discovered,” Professor Li said. “If scanning LiDAR systems using our technology were to become very compact and more affordable, then the systems could be mounted almost anywhere: on cars, robots, or drones. That would lead to ubiquitous deployment of scanning LiDAR technology and encourage its use in an even wider range of applications.”

Moving into the marketplace

Next steps for the research team include improving the performance of their device and increasing its scanning distance from 115 m to 300 m. Within one year, the team aims to develop a prototype that can be taken out of their lab and into bustling streets for real-world image testing. Bingzhao Li and Professor Li are also planning to form a start-up company to commercialize their technology within two to three years. They have already received seed grants for this purpose from UW CoMotion and the Washington Research Foundation.

Professor Li said he was proud of what his small research team accomplished at UW ECE within a relatively short amount of time.

“The fact that it only took two students to make this happen in about a nine-month period speaks to the beauty and the simplicity of the technology,” he said. “At its core, this device is not too complicated. It is a straightforward implementation of a good idea, and it works.”
